Overview

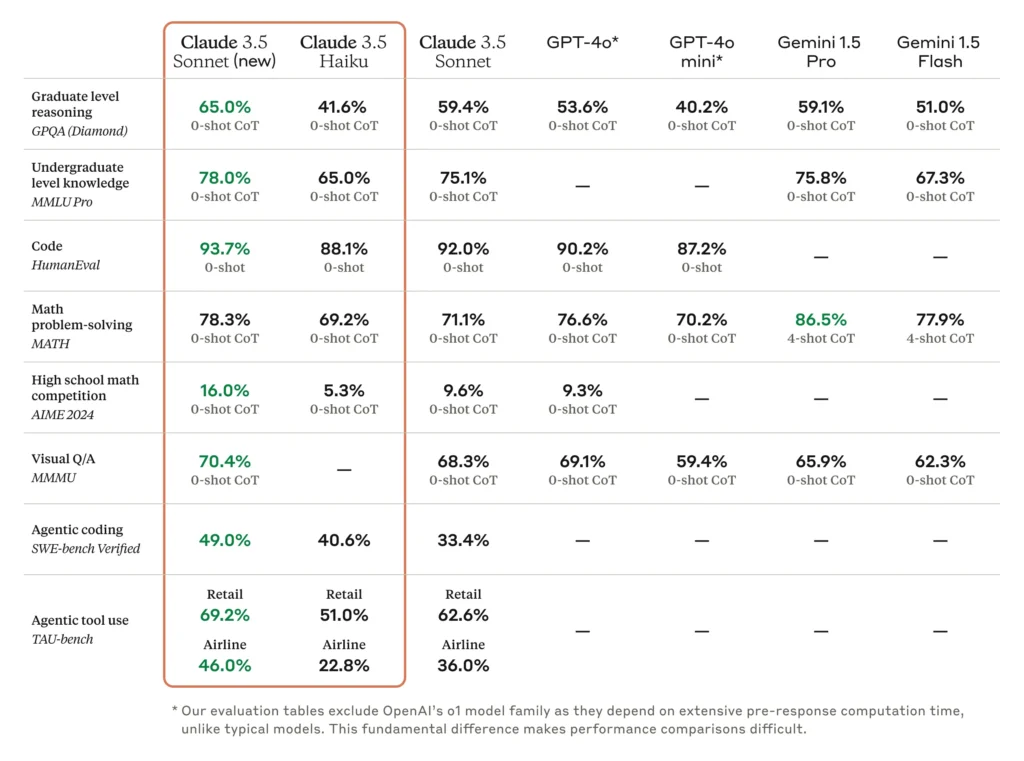

The latest benchmark results show significant gains for Claude 3.5 Sonnet (new) and strong results for the new Claude 3.5 Haiku across reasoning, coding, math, and agentic tool-use benchmarks. This analysis compares their capabilities with other leading AI models, including GPT-4o and Gemini 1.5 Pro.

Benchmark Performance Breakdown

1. Graduate Level Reasoning (GPQA Diamond)

- Claude 3.5 Sonnet (new): 65.0%

- Claude 3.5 Haiku: 41.6%

- GPT-4o*: 53.6%

- Gemini 1.5 Pro: 59.1%

Key Insight: Claude 3.5 Sonnet (new) leads in graduate-level reasoning with 65.0% on GPQA Diamond, ahead of Gemini 1.5 Pro (59.1%) by 5.9 percentage points and GPT-4o* (53.6%) by 11.4 percentage points.

2. Undergraduate Knowledge (MMLU Pro)

- Claude 3.5 Sonnet (new): 78.0%

- Claude 3.5 Haiku: 65.0%

- Gemini 1.5 Pro: 75.8%

Notable Achievement: Claude 3.5 Sonnet demonstrates superior undergraduate-level knowledge, outperforming Gemini 1.5 Pro by 2.2 percentage points.

3. Coding Capabilities (HumanEval)

- Claude 3.5 Sonnet (new): 93.7%

- Claude 3.5 Haiku: 88.1%

- GPT-4o*: 90.2%

Breakthrough: Claude 3.5 Sonnet (new) posts the highest HumanEval score among the compared models, 93.7%, ahead of GPT-4o* (90.2%) and Claude 3.5 Haiku (88.1%).

4. Mathematical Problem-Solving (MATH)

- Claude 3.5 Sonnet (new): 78.3%

- Claude 3.5 Haiku: 69.2%

- Gemini 1.5 Pro: 86.5%

Competitive Edge: Gemini 1.5 Pro leads in math problem-solving (86.5% vs. 78.3%), while Claude 3.5 Sonnet (new) achieves its 78.3% in a 0-shot setting.

5. Agentic Capabilities

SWE-bench Verified

- Claude 3.5 Sonnet (new): 49.0%

- Claude 3.5 Haiku: 40.6%

- Original Claude 3.5 Sonnet: 33.4%

TAU-bench Performance

Retail Domain

- Claude 3.5 Sonnet (new): 69.2%

- Claude 3.5 Haiku: 51.0%

- Original Claude 3.5 Sonnet: 62.6%

Airline Domain

- Claude 3.5 Sonnet (new): 46.0%

- Claude 3.5 Haiku: 22.8%

- Original Claude 3.5 Sonnet: 36.0%

Key Model Differentiators

Claude 3.5 Sonnet (new)

- Superior Coding Capabilities
  - Highest HumanEval score among the compared models (93.7%)
  - Large gain on SWE-bench Verified (49.0%, up from 33.4% for the original Claude 3.5 Sonnet)

- Enhanced Reasoning
  - Top score in graduate-level reasoning (65.0% on GPQA Diamond)
  - Strong undergraduate knowledge (78.0% on MMLU Pro)

- Improved Tool Usage
  - TAU-bench gains in both the retail (62.6% to 69.2%) and airline (36.0% to 46.0%) domains
  - Enhanced computer interface navigation capabilities

Claude 3.5 Haiku

- Efficiency Optimized
  - Matches Claude 3 Opus performance on many evaluations
  - Maintains high speed and cost-effectiveness

- Strong Coding Performance
  - 88.1% on HumanEval
  - 40.6% on SWE-bench Verified

- Balanced Capabilities
  - Competitive across most reported benchmarks, strongest in coding
  - Optimized for real-time applications

Computer Use Capabilities

Performance Metrics (Claude 3.5 Sonnet (new) on OSWorld)

- Screenshot-only category: 14.9%

- Extended-steps setting: 22.0%

Key Applications

- Interface Navigation
  - Cursor movement
  - Button interaction
  - Text input capabilities

- Task Automation
  - Form filling
  - Data transfer
  - Web navigation

Industry Applications and Impact

Development and Testing

- Enhanced DevSecOps capabilities

- Improved code generation and review

- Automated testing procedures

Business Process Automation

- Streamlined workflow management

- Reduced manual intervention

- Improved accuracy in repetitive tasks

Research and Analysis

- Advanced data processing

- Complex problem-solving

- Enhanced decision support

Future Implications

Technical Evolution

- Continued improvement in computer interaction

- Enhanced task completion capabilities

- Reduced error rates in complex operations

Industry Integration

- Broader adoption across sectors

- Enhanced automation possibilities

- Improved human-AI collaboration

Head-to-Head Model Comparisons

Claude 3.5 Sonnet (new) vs. Claude 3.5 Haiku

Advantages of Claude 3.5 Sonnet (new)

- Higher graduate reasoning: 65.0% vs. 41.6% (+23.4 points)

- Superior undergraduate knowledge: 78.0% vs. 65.0% (+13.0 points)

- Better coding performance: 93.7% vs. 88.1% (+5.6 points)

- Stronger math problem-solving: 78.3% vs. 69.2% (+9.1 points)

- Higher agentic coding: 49.0% vs. 40.6% (+8.4 points)

- Better retail tool use: 69.2% vs. 51.0% (+18.2 points)

- Superior airline tool use: 46.0% vs. 22.8% (+23.2 points)

Advantages of Claude 3.5 Haiku

- Faster processing speed

- Lower computational requirements

- More cost-effective for routine tasks
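The gaps in this comparison are absolute differences in percentage points, which can read very differently from relative change. The short Python sketch below recomputes both from the scores listed above; the dictionary layout and the helper name `gap` are illustrative choices, not part of any published tooling.

```python
# Scores as listed above; the dict layout and benchmark keys are illustrative.
SONNET_NEW = {
    "GPQA Diamond": 65.0, "MMLU Pro": 78.0, "HumanEval": 93.7, "MATH": 78.3,
    "SWE-bench Verified": 49.0, "TAU-bench Retail": 69.2, "TAU-bench Airline": 46.0,
}
HAIKU = {
    "GPQA Diamond": 41.6, "MMLU Pro": 65.0, "HumanEval": 88.1, "MATH": 69.2,
    "SWE-bench Verified": 40.6, "TAU-bench Retail": 51.0, "TAU-bench Airline": 22.8,
}


def gap(a: float, b: float) -> tuple[float, float]:
    """Return (absolute gap in percentage points, relative gap in percent of b)."""
    points = round(a - b, 1)
    relative = round((a - b) / b * 100, 1)
    return points, relative


# Print both views of each gap, e.g. "GPQA Diamond: +23.4 points (...% relative)".
for name in SONNET_NEW:
    points, relative = gap(SONNET_NEW[name], HAIKU[name])
    print(f"{name}: {points:+.1f} points ({relative:+.1f}% relative)")
```

For example, the airline TAU-bench gap is 23.2 points in absolute terms, but because Haiku's 22.8% is the smaller base, Claude 3.5 Sonnet (new)'s score is roughly double it, a relative gain of about 102%.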

Claude 3.5 Sonnet (new) vs. Original Claude 3.5 Sonnet

Advantages of Claude 3.5 Sonnet (new)

- Higher graduate reasoning: 65.0% vs. 59.4% (+5.6 points)

- Better undergraduate knowledge: 78.0% vs. 75.1% (+2.9 points)

- Improved coding: 93.7% vs. 92.0% (+1.7 points)

- Enhanced math solving: 78.3% vs. 71.1% (+7.2 points)

- Superior agentic coding: 49.0% vs. 33.4% (+15.6 points)

- Better retail tool use: 69.2% vs. 62.6% (+6.6 points)

- Improved airline tool use: 46.0% vs. 36.0% (+10.0 points)

Areas of Similarity

- Similar processing speed

- Comparable pricing

- Same baseline capabilities

Claude 3.5 Sonnet (new) vs. GPT-4o*

Advantages of Claude 3.5 Sonnet (new)

- Higher graduate reasoning: 65.0% vs. 53.6% (+11.4 points)

- Superior coding performance: 93.7% vs. 90.2% (+3.5 points)

- Higher visual Q/A: 70.4% vs. 69.1% (+1.3 points)

- Reported agentic coding and tool-use results (no GPT-4o* figures listed)

Advantages of GPT-4o*

- None among the listed metrics: on math problem-solving GPT-4o* scores 76.6% vs. 78.3%, so Claude 3.5 Sonnet (new) leads by 1.7 points

Not Comparable

- Undergraduate knowledge (GPT-4o* data not available)

- Several other metrics where GPT-4o* data is missing
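Splitting metrics into advantages on each side plus a "Not Comparable" bucket amounts to comparing only the benchmarks where both models report a score. Below is a minimal Python sketch of that rule, using the Claude 3.5 Sonnet (new) and GPT-4o* scores quoted in this comparison; `None` marks a missing GPT-4o* figure, and `head_to_head` is a hypothetical helper name.

```python
from typing import Optional

# Scores quoted in this comparison; None marks a benchmark with no GPT-4o* figure.
SONNET_NEW = {"GPQA Diamond": 65.0, "MMLU Pro": 78.0, "HumanEval": 93.7,
              "MATH": 78.3, "Visual Q/A": 70.4, "SWE-bench Verified": 49.0}
GPT_4O = {"GPQA Diamond": 53.6, "MMLU Pro": None, "HumanEval": 90.2,
          "MATH": 76.6, "Visual Q/A": 69.1, "SWE-bench Verified": None}


def head_to_head(a: dict[str, Optional[float]],
                 b: dict[str, Optional[float]]) -> dict[str, list[str]]:
    """Sort benchmarks into wins for a, wins for b, ties, and not comparable."""
    result: dict[str, list[str]] = {"a": [], "b": [], "tie": [], "not comparable": []}
    for name in sorted(a.keys() | b.keys()):
        x, y = a.get(name), b.get(name)
        if x is None or y is None:
            # Only one side reports a score, so no advantage can be claimed.
            result["not comparable"].append(name)
        elif x > y:
            result["a"].append(name)
        elif y > x:
            result["b"].append(name)
        else:
            result["tie"].append(name)
    return result


outcome = head_to_head(SONNET_NEW, GPT_4O)
print(outcome["b"])               # → []
print(outcome["not comparable"])  # → ['MMLU Pro', 'SWE-bench Verified']
```

Treating a missing score as "not comparable" rather than as zero keeps an unreported benchmark from counting as a loss for either model.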

Claude 3.5 Sonnet (new) vs. Gemini 1.5 Pro

Advantages of Claude 3.5 Sonnet (new)

- Higher graduate reasoning: 65.0% vs. 59.1% (+5.9 points)

- Better undergraduate knowledge: 78.0% vs. 75.8% (+2.2 points)

- Reported coding results (no coding figures listed for Gemini 1.5 Pro)

- Higher visual Q/A: 70.4% vs. 65.9% (+4.5 points)

Advantages of Gemini 1.5 Pro

- Superior math problem-solving: 86.5% vs. 78.3% (+8.2 points)

Claude 3.5 Haiku vs. GPT-4o mini

Advantages of Claude 3.5 Haiku

- Higher coding performance: 88.1% vs. 87.2% (+0.9 points)

- Slightly higher graduate reasoning: 41.6% vs. 40.2% (+1.4 points)

- Reported undergraduate knowledge results (no GPT-4o mini figure listed)

- Reported agentic capability results (no GPT-4o mini figures listed)

Advantages of GPT-4o mini

- Slightly better math solving: 70.2% vs. 69.2% (+1.0 points)

Common Trends Across All Models

Strengths of Claude Family

- Coding Capabilities
  - Consistently higher performance in coding tasks
  - Better agentic coding abilities
  - Superior tool use metrics

- Knowledge Assessment
  - Strong performance at both graduate and undergraduate levels
  - Comprehensive coverage across different knowledge domains

- Tool Use and Automation
  - Leading capabilities in computer interaction
  - Better performance in complex task automation

Areas of Competition

- Mathematical Processing
  - Varied performance across models
  - Gemini 1.5 Pro strongest in this area (86.5% on MATH)

- Visual Processing
  - Close competition in visual Q/A tasks
  - Small margins between top performers (70.4%, 69.1%, and 65.9% for Claude 3.5 Sonnet (new), GPT-4o*, and Gemini 1.5 Pro)

- Processing Speed vs. Accuracy
  - Trade-offs between performance and speed
  - Different optimizations for different use cases

Key Takeaways

- Overall Leadership
  - Claude 3.5 Sonnet (new) leads in most categories
  - Shows significant improvements over its predecessor
  - Highest reported scores in coding, graduate-level reasoning, and agentic tasks

- Specialized Excellence
  - Different models show strengths in specific areas
  - Haiku optimized for speed and efficiency
  - Gemini strong in mathematical processing

- Market Positioning
  - Claude family covers a broad spectrum of use cases
  - Different models optimal for different applications
  - Clear differentiation in capabilities and target uses
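The claim that Claude 3.5 Sonnet (new) leads in most categories can be checked by taking the top scorer on each benchmark. Below is a minimal Python sketch, assuming only the scores reported in this article; a model with no listed figure is simply left out of that benchmark, and the short model labels are illustrative.

```python
from collections import Counter

# Scores reported in this article. A model with no listed figure is left out
# of that benchmark. Short model labels are illustrative.
SCORES = {
    "GPQA Diamond": {"Sonnet (new)": 65.0, "Haiku": 41.6, "GPT-4o*": 53.6,
                     "Gemini 1.5 Pro": 59.1, "Sonnet (original)": 59.4},
    "MMLU Pro": {"Sonnet (new)": 78.0, "Haiku": 65.0, "Gemini 1.5 Pro": 75.8,
                 "Sonnet (original)": 75.1},
    "HumanEval": {"Sonnet (new)": 93.7, "Haiku": 88.1, "GPT-4o*": 90.2,
                  "Sonnet (original)": 92.0},
    "MATH": {"Sonnet (new)": 78.3, "Haiku": 69.2, "GPT-4o*": 76.6,
             "Gemini 1.5 Pro": 86.5, "Sonnet (original)": 71.1},
    "SWE-bench Verified": {"Sonnet (new)": 49.0, "Haiku": 40.6,
                           "Sonnet (original)": 33.4},
    "TAU-bench Retail": {"Sonnet (new)": 69.2, "Haiku": 51.0,
                         "Sonnet (original)": 62.6},
    "TAU-bench Airline": {"Sonnet (new)": 46.0, "Haiku": 22.8,
                          "Sonnet (original)": 36.0},
}


def leaders(scores: dict[str, dict[str, float]]) -> dict[str, str]:
    """Return the top-scoring model per benchmark (ties go to the first listed)."""
    return {bench: max(results, key=results.get) for bench, results in scores.items()}


lead_counts = Counter(leaders(SCORES).values())
print(lead_counts.most_common())  # → [('Sonnet (new)', 6), ('Gemini 1.5 Pro', 1)]
```

Counting leads ignores both the size of each margin and missing data: Gemini 1.5 Pro, for instance, has no HumanEval or agentic scores listed here, so it cannot lead those rows.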