In the world of artificial intelligence, innovation is constant, and Mistral AI has raised the bar for small models with its release of Ministral 3B and Ministral 8B. These models are designed for on-device computing, where fast, memory-efficient inference matters. Whether you’re looking to deploy smart assistants, run local analytics, or build autonomous robotics solutions, the Ministral models are worth considering. In this article, we’ll walk you through how to download and deploy the Ministral 3B and Ministral 8B models on a Windows or Mac system.

What Makes Ministral 3B and 8B Stand Out?

Ministral 3B and 8B are part of a new frontier in AI modeling, designed for on-device and at-the-edge use cases. Here’s why these models stand out:

- High Efficiency: Both models leverage state-of-the-art architecture that allows for fast, memory-efficient inference, making them ideal for real-time applications.

- Large Context Windows: The models support up to 128k context length (with 32k currently available on vLLM), ensuring they can handle complex, multi-step workflows efficiently.

- Versatile Use Cases: From handling function calls in agentic workflows to acting as intermediaries between larger models, Ministral 3B and 8B can be tuned for various specialized tasks, offering high performance with low latency.

- Multi-language Support: These models are trained on extensive datasets that allow them to operate fluently in different languages, making them versatile for global applications.

Why Download and Deploy Locally?

Deploying AI models locally offers several advantages:

Privacy: Running models locally ensures that sensitive data remains on your device, providing greater control and confidentiality.

Reduced Latency: Local deployment eliminates the need to interact with cloud servers, allowing faster response times and more efficient real-time applications.

Cost Efficiency: While cloud-based models may incur ongoing costs, running the models locally avoids the pay-per-token system and other usage fees.

How to Download and Deploy Ministral 3B and 8B on Windows or Mac

Step 1: Ensure Your System Meets the Requirements

Before downloading the models, make sure your Windows or Mac machine is capable of running them. You’ll need a GPU for larger models, but smaller models can also run on a CPU.
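As a rough way to check whether a machine has enough memory, the size of the weights can be estimated from the parameter count and the quantization level. The sketch below assumes nominal parameter counts of 3 and 8 billion and counts only the weights; the function name is illustrative, and real usage will be higher once the context cache and runtime overhead are included.

```python
def estimate_weight_memory_gb(num_params_billion: float, bits_per_weight: int) -> float:
    """Approximate memory needed to hold the model weights, in GB (10^9 bytes)."""
    bytes_per_weight = bits_per_weight / 8
    # billions of parameters * bytes per parameter = gigabytes
    return num_params_billion * bytes_per_weight


# Assumption: nominal sizes of 3 and 8 billion parameters.
for name, params in [("Ministral 3B", 3.0), ("Ministral 8B", 8.0)]:
    for bits in (16, 8, 4):
        gb = estimate_weight_memory_gb(params, bits)
        print(f"{name} at {bits}-bit: ~{gb:.1f} GB for weights")
```

By this estimate, a 4-bit quantized 8B model needs roughly 4 GB for its weights, while the same model at 16-bit needs about 16 GB, which is why quantized builds are the usual choice for laptops and desktops.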

Step 2: Download the Ministral Models

Visit the Hugging Face page to get access to the Ministral 3B and Ministral 8B models. For commercial use, you can reach out to Mistral AI for a commercial license, and they’ll assist with the lossless quantization of the models for your specific use cases.

Alternatively, you can use the models in the cloud through Mistral AI’s API with the following model names:

Ministral 3B: ministral-3b-latest

Ministral 8B: ministral-8b-latest
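To show where these model names go, here is a minimal sketch of a request to Mistral AI’s chat completions endpoint using only the Python standard library. The `MISTRAL_API_KEY` environment variable and the `build_chat_request` helper are assumptions made for this example; check the official API documentation for the current request format before relying on it.

```python
import json
import os
import urllib.request

API_URL = "https://api.mistral.ai/v1/chat/completions"


def build_chat_request(prompt: str, model: str = "ministral-8b-latest",
                       api_key: str = "") -> urllib.request.Request:
    """Build (but do not send) a chat completion request for the given model."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


if __name__ == "__main__":
    # Assumption: the API key is stored in the MISTRAL_API_KEY environment variable.
    key = os.environ.get("MISTRAL_API_KEY", "")
    if key:
        req = build_chat_request("Say hello in French.", api_key=key)
        with urllib.request.urlopen(req) as resp:
            body = json.load(resp)
        print(body["choices"][0]["message"]["content"])
```

Switching to the smaller model only requires passing `model="ministral-3b-latest"`.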

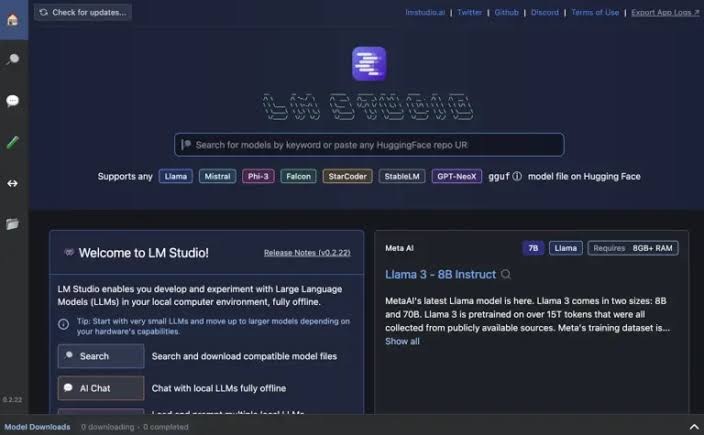

Step 3: Use LM Studio or Jan

Visit the LM Studio website to download the application for your device. Next, download and load a quantized version of Ministral 3B or Ministral 8B to chat with the LLM locally. Jan can be used in much the same way.
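Beyond the chat window, LM Studio can also expose the loaded model through a local, OpenAI-compatible HTTP server, which makes it usable from scripts. The sketch below assumes that server has been started in the app on its default port (1234). The model identifier is a hypothetical placeholder, so replace it with the name LM Studio shows for the model you loaded.

```python
import json
import urllib.request

# Assumption: LM Studio's local server is running on its default port.
LOCAL_URL = "http://localhost:1234/v1/chat/completions"


def extract_reply(response: dict) -> str:
    """Pull the assistant text out of an OpenAI-style chat completion response."""
    return response["choices"][0]["message"]["content"]


def chat_local(prompt: str, model: str = "ministral-8b-instruct",
               url: str = LOCAL_URL) -> str:
    """Send one user message to the local server and return the reply text.

    The default model name is a placeholder, not an official identifier.
    """
    payload = {"model": model, "messages": [{"role": "user", "content": prompt}]}
    req = urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req, timeout=120) as resp:
        return extract_reply(json.load(resp))
```

With the server running, `print(chat_local("Translate 'good morning' to Spanish."))` would send the prompt to the local model without any data leaving the machine.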

Step 4: Customize for Specific Use Cases

The Ministral models are versatile and can be customized for various specialized tasks, such as:

On-Device Translation: Deploy models that can translate text locally without internet connectivity.

Smart Assistants: Build real-time virtual assistants that run on your machine.

Local Analytics: Process data and make real-time decisions without relying on cloud servers.

Mistral AI also offers support for function-calling workflows, where Ministral models can serve as intermediaries between larger models and external APIs.
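A function-calling workflow can be sketched as follows: the application describes its tools to the model, the model answers with the name of a tool and its arguments, and the application runs the matching function. Everything below is illustrative. The `get_weather` tool is hypothetical, and the tool call is simulated rather than produced by a model; the assumed shape (a function name plus a JSON string of arguments) follows the common OpenAI-style convention.

```python
import json


def get_weather(city: str) -> str:
    """Hypothetical local tool; a real one would query a weather service."""
    return f"Sunny in {city}"


# Tool description in the JSON-schema style used by chat APIs with function calling.
TOOLS = [{
    "type": "function",
    "function": {
        "name": "get_weather",
        "description": "Get the current weather for a city.",
        "parameters": {
            "type": "object",
            "properties": {"city": {"type": "string"}},
            "required": ["city"],
        },
    },
}]

# Maps tool names the model may request to the Python functions that implement them.
REGISTRY = {"get_weather": get_weather}


def dispatch(tool_call: dict) -> str:
    """Run the function named in a model-produced tool call and return its result."""
    name = tool_call["function"]["name"]
    # Assumption: arguments arrive as a JSON-encoded string.
    args = json.loads(tool_call["function"]["arguments"])
    if name not in REGISTRY:
        raise ValueError(f"Unknown tool: {name}")
    return REGISTRY[name](**args)


# Simulated tool call, shaped like what a model might return.
example_call = {"function": {"name": "get_weather", "arguments": '{"city": "Paris"}'}}
print(dispatch(example_call))  # prints "Sunny in Paris"
```

In a real workflow, `TOOLS` would be sent along with the chat request, and the result of `dispatch` would be passed back to the model as a tool message so it can compose the final answer.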

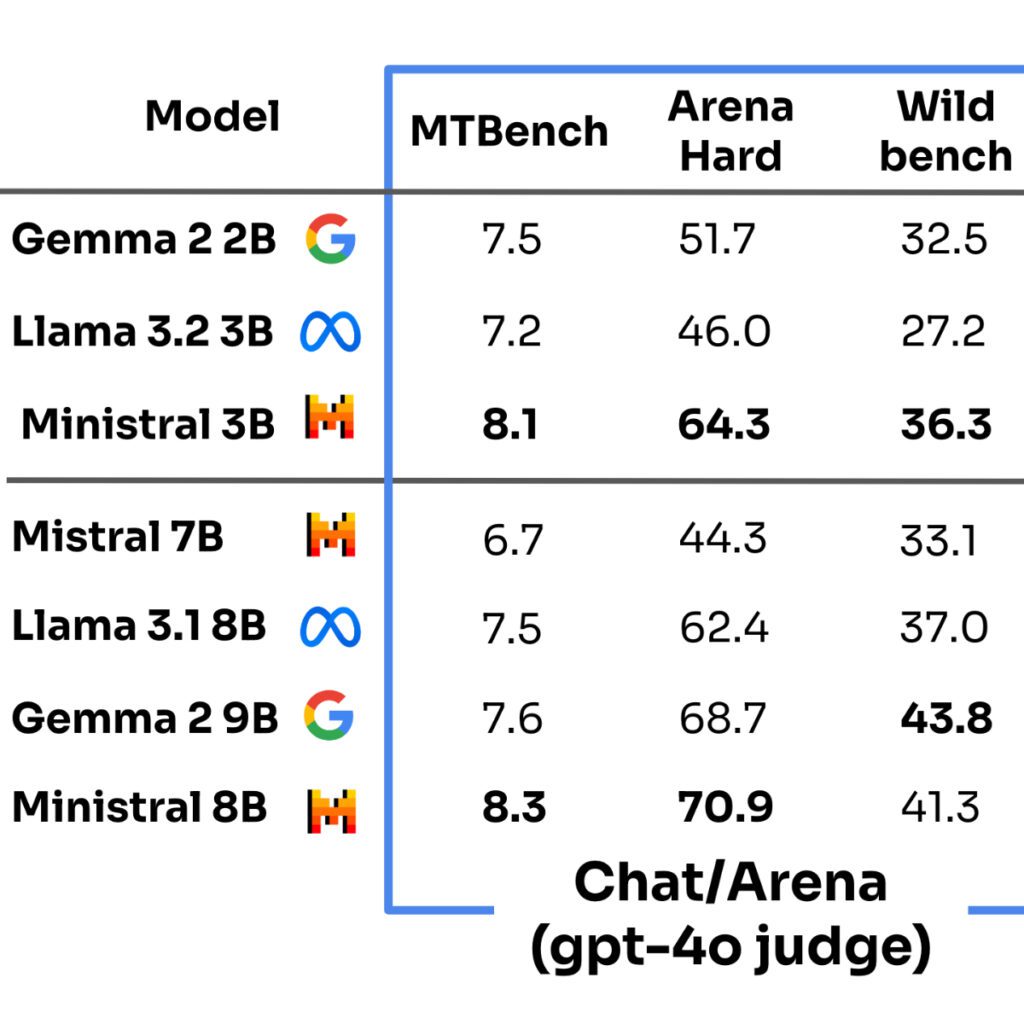

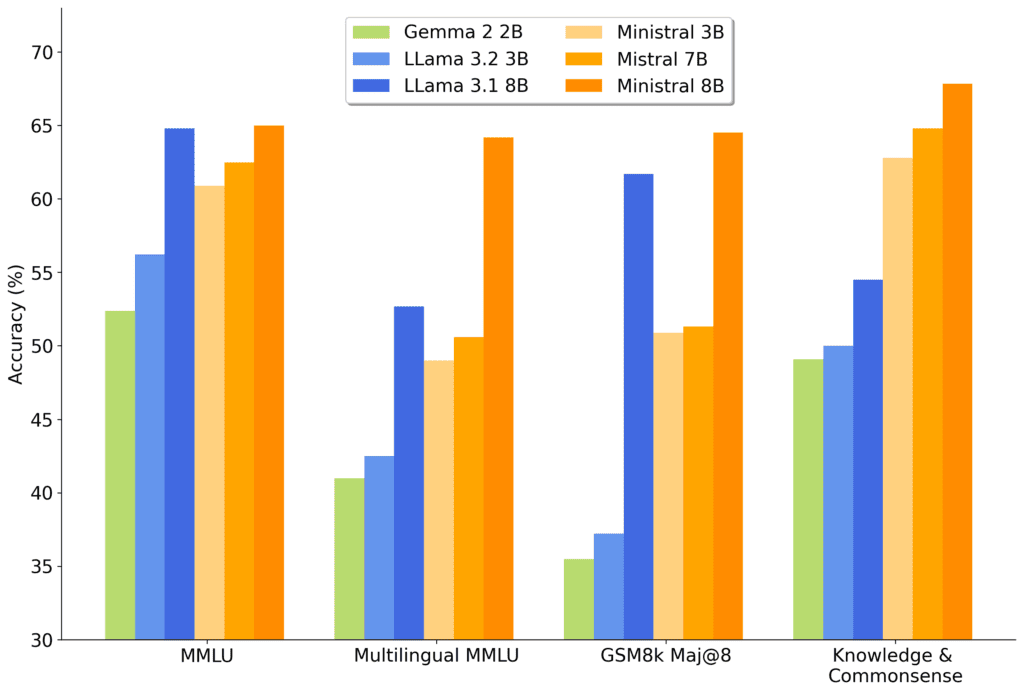

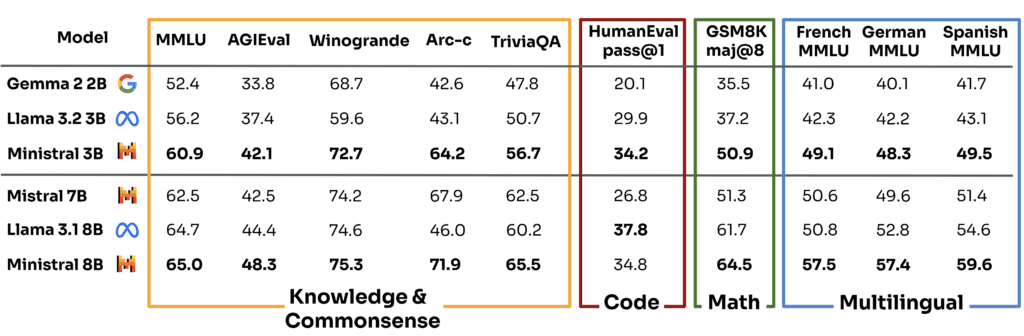

Performance and Benchmarking

According to Mistral AI’s published benchmarks, the Ministral 3B and Ministral 8B models outperform similarly sized peers such as Llama and Gemma models across multiple benchmarks. The models excel in:

Knowledge Representation

Commonsense Reasoning

Function-Calling

Multi-Language Support

The Ministral 8B model is particularly suited for memory-efficient inference thanks to its interleaved sliding-window attention pattern, which reduces the computation load while maintaining high-quality outputs.
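To illustrate the general idea of sliding-window attention, the sketch below builds a causal mask in which each token may attend only to itself and a fixed number of preceding tokens. It is a generic illustration with a tiny window size chosen for readability. It does not reproduce the specific interleaved pattern used in Ministral 8B, whose details are not covered in this article.

```python
def sliding_window_mask(seq_len: int, window: int) -> list[list[bool]]:
    """mask[i][j] is True when query position i may attend to key position j."""
    return [
        # Causal (j <= i) and within the last `window` positions (i - j < window).
        [0 <= i - j < window for j in range(seq_len)]
        for i in range(seq_len)
    ]


mask = sliding_window_mask(seq_len=6, window=3)
for row in mask:
    print("".join("x" if allowed else "." for allowed in row))
# x.....
# xx....
# xxx...
# .xxx..
# ..xxx.
# ...xxx
```

Because each row contains at most `window` allowed positions, the attention cost grows with `seq_len * window` instead of `seq_len * seq_len`, which is where the memory and compute savings come from.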

Pricing and Licensing

Mistral AI offers competitive pricing for their models:

Ministral 3B: $0.04 / M tokens (input and output)

Ministral 8B: $0.1 / M tokens (input and output)
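As a quick worked example of these rates, the sketch below estimates the API cost of a workload. The function and dictionary names are illustrative, and the prices are the ones listed above, which may change over time.

```python
# Prices in USD per million tokens, as listed above (same rate for input and output).
PRICE_PER_MILLION_USD = {
    "ministral-3b-latest": 0.04,
    "ministral-8b-latest": 0.10,
}


def estimate_cost_usd(model: str, input_tokens: int, output_tokens: int) -> float:
    """Estimate the cost of a workload when input and output share one rate."""
    total_tokens = input_tokens + output_tokens
    return total_tokens / 1_000_000 * PRICE_PER_MILLION_USD[model]


# Example: 4 million input tokens and 1 million output tokens per month.
for model in PRICE_PER_MILLION_USD:
    cost = estimate_cost_usd(model, 4_000_000, 1_000_000)
    print(f"{model}: ${cost:.2f} per month")
```

For this example workload of 5 million tokens, the estimate comes to about $0.20 on Ministral 3B and $0.50 on Ministral 8B.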

Both models are available under the Mistral Commercial License and the Mistral Research License. For self-deployed use, it’s best to reach out to Mistral AI for commercial licensing tailored to your specific needs.

Summary: Unlock Frontier AI Capabilities with Ministral Models

The Ministral 3B and 8B models represent a significant step forward in AI performance for on-device and at-the-edge applications. Whether you’re a hobbyist looking to experiment with AI or a business aiming to deploy smart solutions in real-time environments, these models offer efficiency, versatility, and the privacy of running entirely on your own hardware.

Deploying these models locally on Windows or Mac is straightforward, enabling you to leverage the power of Mistral AI without relying on cloud infrastructure. Start exploring the full potential of Ministral 3B and Ministral 8B today and take your AI applications to the next level.